Thoughts on AI

Everyone is using Artificial Intelligence (AI) for everything these days, even structural engineers. I see the junior engineers in the office using ChatGPT on close to a daily basis. Even some of the senior ones can be observed quickly closing a suspiciously ChatGPT-like browser tab every now and then. But AI comes with some traps for the unwary, and this post aims to point (some of) them out.

Do AI Engineers Dream of Simply Supported Sheep?

Any good structural engineer should be able to give the formula for bending moments in a simply supported beam without hesitation. $\frac{PL}{4}$ and $\frac{wL^{2}}{8}$ should haunt their dreams, and they should be able to draw the moment diagram in their sleep. But do AI engineers dream in bending moment diagrams?

It turns out that maybe they do, but it’s still just a dream (or a nightmare!).

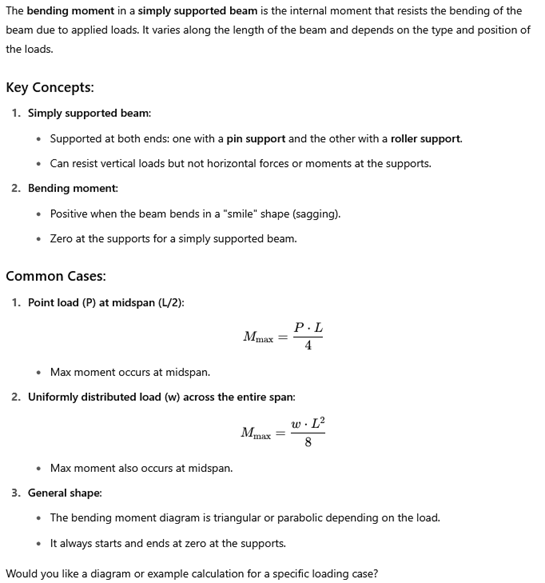

When prompted “What is the bending moment in a simply supported beam?” ChatGPT gave the following answer:

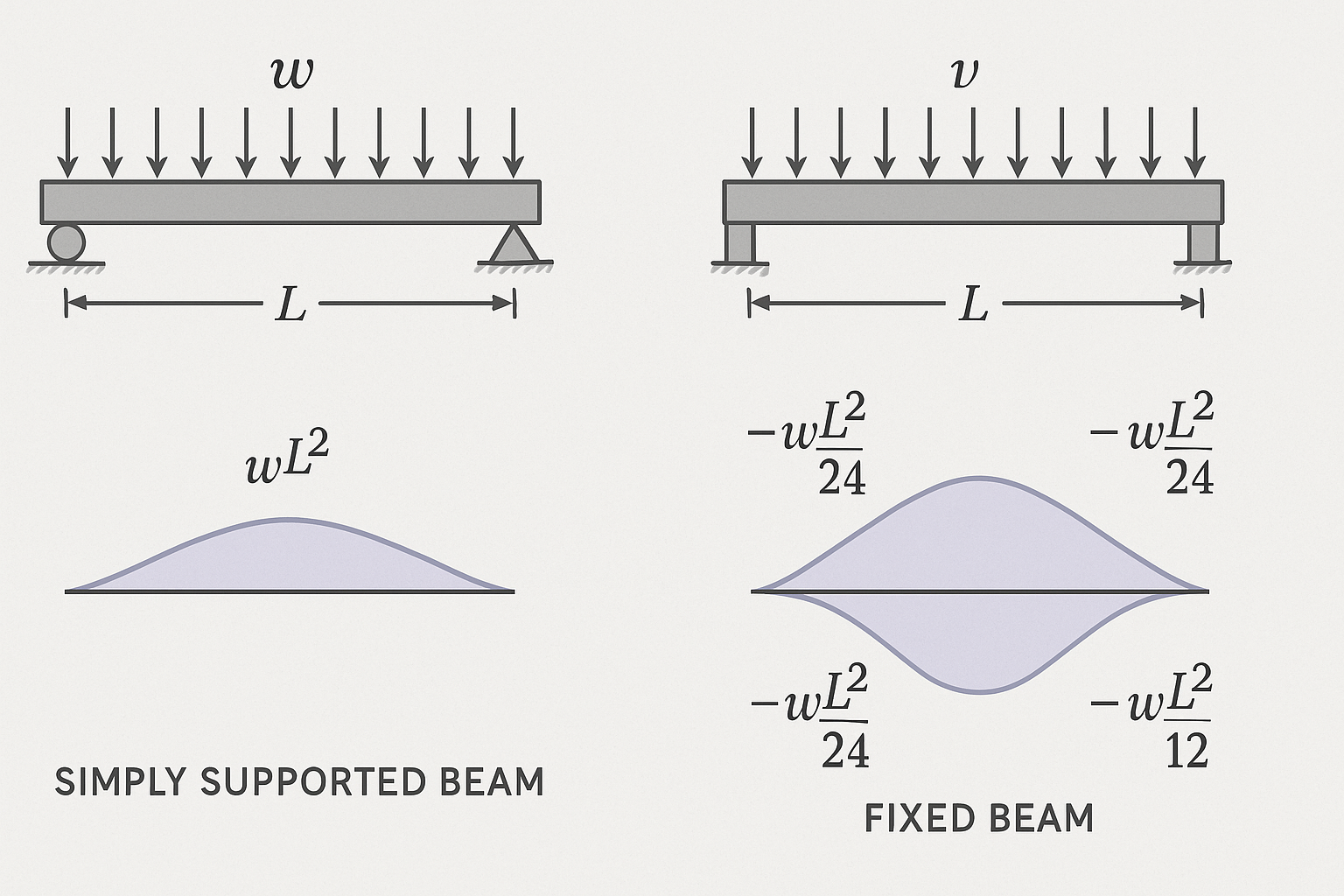

The formulas are correct, and the text explanation is mostly correct (I count at least two errors). A few prompts later, including a request to draw the bending moment diagram, I got this:

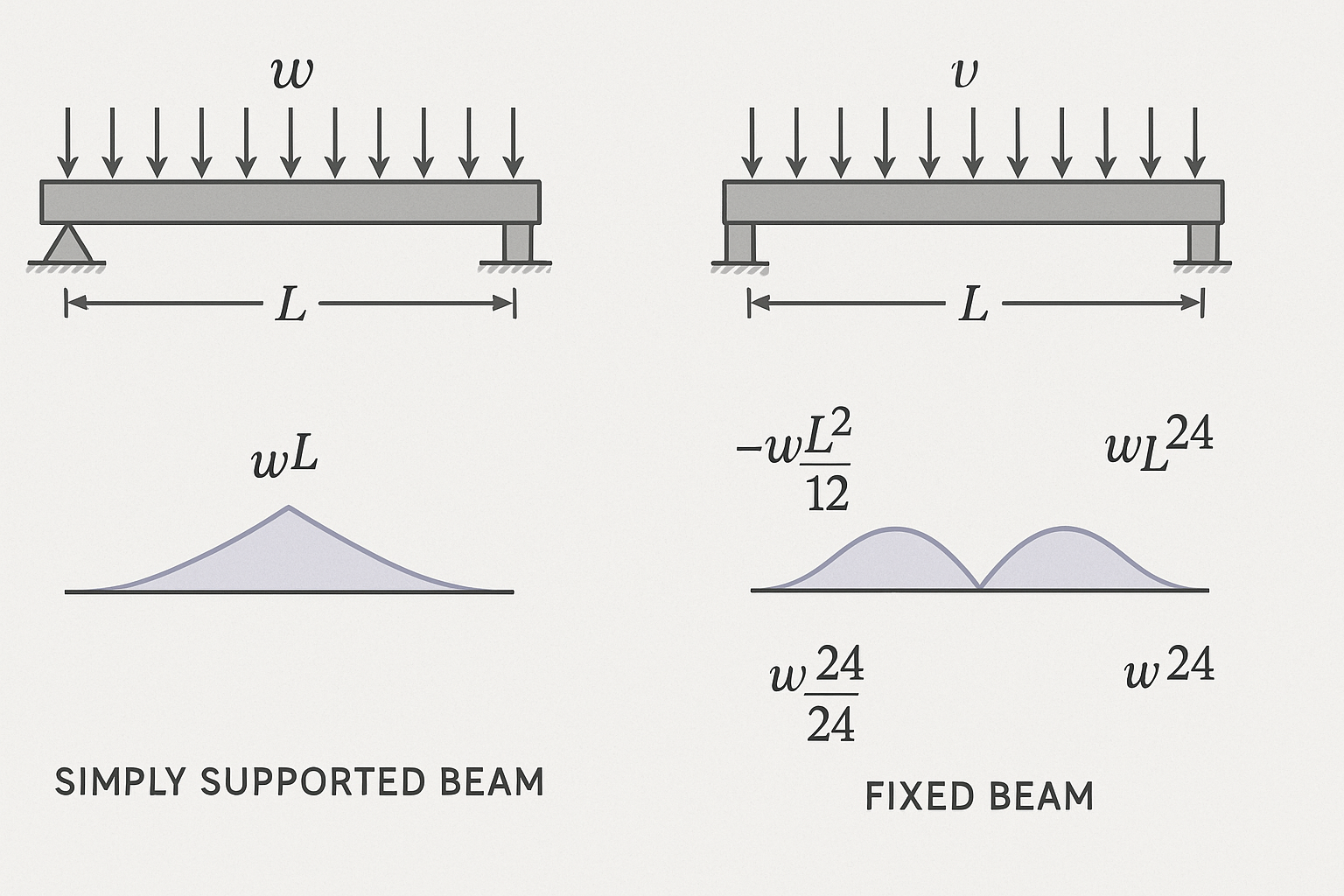

Which isn’t what I was hoping for. When told it was incorrect and to try again, ChatGPT gave me this:

This is wildly wrong. The shapes are wrong, the equations are wrong and the notation is different for simply supported and fixed-ended beams (e.g. $w$ and $v$ are both used for the applied load). At this point I gave up.

Using a more design focussed example, when asked to calculate the bending capacity of a 3.600m long 410UB54, ChatGPT gave a section capacity $\phi M_{sx} = 175 \text{ kNm}$ and a member capacity $\phi M_{bx} = 140 \text{ kNm}$. The real capacities are approximately $\phi M_{sx} = 304 \text{ kNm}$ and $\phi M_{bx} \approx 180 \text{ kNm}$. ChatGPT's section capacity is more than 40% too low, and its member capacity more than 20% too low!
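The size of the miss can be worked out directly from the four numbers quoted above. The following is a minimal Python sketch, with the error measured as a fraction of the real capacity; the function and variable names are mine and purely illustrative.

```python
# Capacities quoted in the text above, in kNm.
chatgpt = {"phi_Msx": 175.0, "phi_Mbx": 140.0}
reference = {"phi_Msx": 304.0, "phi_Mbx": 180.0}  # phi_Mbx is approximate


def relative_error(estimate: float, actual: float) -> float:
    """Signed error of `estimate`, expressed as a fraction of `actual`."""
    return (estimate - actual) / actual


errors = {name: relative_error(chatgpt[name], reference[name]) for name in chatgpt}

for name, err in errors.items():
    # Negative values mean the estimate is below the real capacity.
    print(f"{name}: {err:+.0%}")
```

Both errors come out negative, so in this case ChatGPT erred on the conservative side. That is luck, not design.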

Probabilistic vs Deterministic

So what’s going on? Why does ChatGPT give correct answers that seem like magic (the first case above), followed by dumb or just plain weird answers? The key is that most modern AIs are not deterministic. They are probabilistic.

If you ask an AI to design a beam for you, it isn’t trying to solve the problem “what do the equations in the code require”. It is trying to solve the problem “what is the statistically most likely string of characters/tokens to follow the question ‘design this beam’” (or the equivalent for images etc.). These are not the same thing.

As an aside, if you want a detailed overview of what’s going on inside ChatGPT or similar GPT based AIs, see What is ChatGPT Doing and Why Does it Work by Stephen Wolfram.

This explains why sometimes the AI results seem like magic. There are probably thousands of websites and teaching resources that describe the moments in simply supported beams and other basic structural configurations. It is easy to see why the most probable token to follow “what is the moment in a simply supported beam?” is $\frac{PL}{4}$.

However, when the question is “draw the matching diagram”, things get a lot more difficult. While text and formulae follow specific conventions, diagrams are much less consistent. Even if every piece of training data that includes the formula also includes a diagram, there is likely to be wide variety in how restraints are drawn, whether the line is drawn on the tension or compression side, how the diagram is scaled, what color (or black and white) is used, and so on. All these variations make it harder to draw a “correct” diagram based on the most likely next token.

And if you ask a very specific question - e.g. “what is the moment capacity of a 3.6m long 410UB54 as per AS4100?” - there is likely to be very little or no training data. The AI may be able to extrapolate somewhat - “AS4100” will probably suggest an Australian context for the answers. But beyond that the extrapolation is likely to be (wildly) inaccurate.

In comparison, design codes are all deterministic, not probabilistic. Even when they involve probability the answer is still determined by a set of equations. Given a mean and a standard deviation, the normal curve and its percentiles are perfectly determined. You can’t demonstrate compliance with the code requirements using the output of an LLM which feels no more obligation to follow the code than Jack Sparrow did.
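As a small illustration of "probability, but still deterministic", here is a sketch using Python's standard `statistics.NormalDist`. The mean and standard deviation are made-up numbers for a hypothetical material strength, not values from any code or test data.

```python
from statistics import NormalDist

# Hypothetical strength distribution (illustrative values only).
mean_mpa = 40.0
std_dev_mpa = 5.0

strength = NormalDist(mu=mean_mpa, sigma=std_dev_mpa)

# 5th percentile: the value that 95% of samples are expected to exceed.
fifth_percentile = strength.inv_cdf(0.05)

# Asking again gives exactly the same number; nothing is being sampled.
assert fifth_percentile == strength.inv_cdf(0.05)

print(f"5th percentile: {fifth_percentile:.1f} MPa")
```

The inputs describe a random quantity, but the percentile is a fixed function of them. Run it today or next year and the answer does not change.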

To make things worse, AI tools often include deliberate randomization of the results (beyond the inherent randomness of their training data). The amount of randomization is controlled by a setting called the “temperature” of the model (see here). Occasionally picking a less likely next token seems to give more realistic answers, at the cost of accuracy. Worse, because the answers seem more realistic, it can be hard to tell when they are nonsense. These hallucinations have already had serious real world consequences for AI users.
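To give a feel for what temperature does, here is a toy Python sketch of temperature-scaled sampling. It is not how any particular product is implemented; the three candidate "tokens" and their scores are invented for illustration, and real models choose among tens of thousands of tokens.

```python
import math
import random


def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw token scores into probabilities, sharpened or flattened by temperature."""
    scaled = {tok: s / temperature for tok, s in scores.items()}
    biggest = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - biggest) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_token(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick one token at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(scores, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Made-up scores for the token following "the midspan moment is ...".
toy_scores = {"PL/4": 4.0, "PL/8": 2.5, "PL/2": 1.0}

cold = softmax_with_temperature(toy_scores, temperature=0.2)  # nearly always "PL/4"
hot = softmax_with_temperature(toy_scores, temperature=2.0)   # wrong answers get real weight

rng = random.Random(0)  # seeded so the run is repeatable
picks = [sample_token(toy_scores, 2.0, rng) for _ in range(10)]
print(cold, hot, picks, sep="\n")
```

At low temperature the most likely token wins almost every time. At high temperature the wrong formulas are picked a meaningful fraction of the time, and each one reads just as confidently as the right one.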

Needless to say, random, less than optimal answers are not what you want when designing a bridge!

This may change over time as more deterministic tools get added to AI. But even if ChatGPT buys a CHECKSTEEL license, the input into CHECKSTEEL will still be probabilistic.

The Problem of Context

Another issue is the context of the question. What you ask the AI is usually something like “design this beam”. The real question an engineer is asking is usually something more like:

“Design a 3.6m beam to support this particular conveyor head pulley and drive. The bending restraints are probably FF, but if the beam gets really deep maybe I should use PP to be safe. Given the possible need for stiffeners in the web maybe a heavier beam that is inefficient for pure bending loads but that lets us get rid of the stiffeners will be more cost effective. But maybe it won’t - maybe stiffeners are the right answer. It also needs to be deep enough for the end connection. And do we use an offset end plate that can slide in between the flanges of the supporting beams easily, or do we use web side plates that have less fabrication but may be harder to install? Also, I know the site is already planning that next upgrade and they are talking about an extra conveyor which will also use this same beam, so if it’s not that much extra cost maybe allowing for that now makes sense. And how do they actually intend to install this? Is it being lifted up as part of a larger module or being stick built? Where is the best spot for crane access and how does that affect the size of this beam? By the way the client has an irrational hatred of UBs so better make it a UC or a welded section. And make sure to think about corrosion resistance! And a thousand other things you see on the drawings but can’t put into words, or have heard verbally from the client and no longer remember except in your subconscious. And you need to be able to document the result in a way that would satisfy the client and (hopefully not) a lawyer if something ever goes wrong.”

Some of these factors can be input into a prompt. However, by the time you’ve tried to put all of them in you probably could have designed the beam by yourself already.

Also, much of the context can only come from visiting the site in the real world (especially in brownfield work like I do). Until we live in an I, Robot world this context will remain outside the ability of any AI model to include.

Am I An AI Luddite?

No! I’m not an AI Luddite. In fact, this post was partly written using Cursor and in my recent blog rewrite Cursor and its AI functions did most of the work. The new blog logo is from ChatGPT. I have even been known to ask ChatGPT to give me a summary of an engineering topic or design procedure.

This also shouldn’t be seen as an Artificial General Intelligence (AGI) skeptic spouting off (although I am an AGI skeptic - that’s for another time). For the moment, even if AI turns out to be everything its boosters say it will be, and the singularity leads us all into the sunlit uplands (or turns us into biological batteries for the matrix), it isn’t yet. So take this as a call to use it responsibly, while we wait for the utopian future promised by our AI overlords.

How To Use AI Responsibly

Using AI responsibly is really no different than using any other source of information - your boss, your textbook or your mum. So how do you know you can trust their information?

Can you check it? Some things are easy to check. If someone says the bending moment in a beam is $x$, $\frac{PL}{4}$ it. Some things are hard to check but should be checked regardless. This is why 3rd Party Design Reviews exist for important structures, QA plans exist for welds and why concrete test cylinders are broken. If you’re designing an airplane, double check your calculations, and then triple check by testing a prototype.

Are you experienced in the field of interest? Through years of experience you have developed knowledge of what works and what doesn’t in structural engineering. This is the famous “engineering judgement” your first boss was always telling you about. If you know what you should expect, when AI tells you something different you can spot it straight away. And if you don’t have experience, go back to step 1.

Is the information from a valid authority? Argument from authority is a logical fallacy. However, it’s only an informal fallacy. As a practical working engineer I don’t have time to check everything from scratch myself and I’m sure you don’t either. Relying on trusted authorities like Australian Standards (Standards Australia), industry organisations (e.g. Steel Australia) and well regarded textbooks is necessary to get things done. These authorities also have incentives (legal obligations, the possibility of future sales or my membership fees!) to provide accurate information.

How does this apply to AI?

Can you check it? When ChatGPT created the logo for this blog I didn’t need to go and find an expert in graphic design. I could just look at the logo and see if I liked it. If it’s easy to check, use AI and check the results. Hand calculations will still be as relevant in the 21st century as they were in the 19th.

Are you experienced? Does AI give you an answer that makes sense based on what you know the result should look like? Is the number within an order of magnitude of your expectations? Draw the expected diagram, and compare it to what the AI says.

Can you check it with a trusted source? Look up the standard or a good reference textbook. Ask the AI for its sources and check if they exist. Are they reputable? Do they say what the AI thinks they said?
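The "can you check it" step is often only a few lines of arithmetic. Below is a minimal Python sketch of that kind of hand check for a simply supported beam. The function names, the 5% tolerance and the example loads are my own illustrative assumptions, not anything from a standard.

```python
def midspan_moment_point_load(P: float, L: float) -> float:
    """Maximum moment for a central point load P on a simply supported span L: PL/4."""
    return P * L / 4


def midspan_moment_udl(w: float, L: float) -> float:
    """Maximum moment for a uniformly distributed load w over span L: wL^2/8."""
    return w * L**2 / 8


def is_plausible(claimed: float, expected: float, tolerance: float = 0.05) -> bool:
    """True if `claimed` is within `tolerance` (as a fraction) of `expected`."""
    return abs(claimed - expected) <= tolerance * abs(expected)


# Hypothetical check: 10 kN/m over a 3.6 m span.
expected = midspan_moment_udl(w=10.0, L=3.6)  # 16.2 kNm

print(is_plausible(claimed=16.2, expected=expected))  # True: matches the hand calc
print(is_plausible(claimed=32.4, expected=expected))  # False: out by a factor of two
```

The point is not the code itself. If you can state the expected answer before you ask, a wrong answer has nowhere to hide.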

Some of the issues raised above may become less important as AI develops. “Bolted on” tools may address some of the issues with randomness and improvements in training data volume and quality may improve its ability to answer specific questions. Improvements in AI may make some of this redundant, or full AGI may emerge and put us all out of a job. But for the moment, treat AI output with the same skepticism you’d give to the greenest of graduates.